Machine Learning

There are several different platforms for implementing and experimenting with machine learning algorithms. For example, the R caret and tidymodels packages/environments allows for common syntax to be applied to execute a wide variety of algorithms.

In this course, we will explore scikit-learn. This is a toolkit that expands the functionality of SciPy, a library for scientific computation with Python used extensively within mathematics, engineering, and the physical sciences in general. A large number of tool kits are available for expanding SciPy. A full list can be found here.

Scikit-learn specifically allows for:

- preprocessing data for use in predictive modeling

- training a variety of machine learning algorithms

- optimizing hyperparameters

- predicting to new data and assessing model performance

- visualizing models and results

Why do we care about machine learning? The primary reasons is that machine learning algorithms have been shown to be powerful tools for discovering patterns in data and modeling or predicting processes. They allow for a variety of prediction types including:

- Categorical: predict discrete categories, such as types of land cover or types of forests

- Binary/Probabilistic: differentiate two categories and/or predict the probability of a sample belonging to a certain class; for example, what is the likelihood that a location might experience a landslide in the near future?

- Continuous: predict continuous measures, such as depth to the water table or percent soil clay content; these are regression-type problems.

In this section, I will provide examples relating to a binary classification problem. Specifically, we will differentiate wetlands from uplands to produce an estimate of the probability of wetland occurrence at locations on the landscape based on local topographic variables.

After working through this module you will be able to:

- prepare data for input into machine learning models.

- optimize, train, and assess machine learning models.

- compare feature spaces and different algorithms in regards to model performance.

Optimize, Train, and Predict using Random Forest

As usual, we will begin by reading in the required libraries.

- Pandas: read in our training and validation data tables and store them as DataFrames

- NumPy: work with data arrays

- Matplotlib/Seaborn: visualize data

- Scikit-learn: implement machine learning in Python including data preprocessing, hyperparameter optimization, training, and assessment

In order to simplify the code, I am importing specific tools from scikit-learn. Here is a brief description of the specific tools.

- RandomForestClassifier(): implementation of random forest (RF) algorithm for classification

- KNeighborsClassifier(): implementation of k-Nearest Neighbor (k-NN) algorithm for classification

- SVC(): implementation of support vector machines (SVM) for classification

- confusion_matrix(): return confusion matrix for model assessment

- classification_report(): return several classification accuracy assessment metrics

- roc_curve(): calculate receiver operating characteristics curve (ROC) for probabilistic assessment

- roc_auc_score(): calculate area under the ROC curve (AUC)

- StandardScaler: standardize predictor variables

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib inline

from sklearn.ensemble import RandomForestClassifier

from sklearn.neighbors import KNeighborsClassifier

from sklearn.svm import SVC

from sklearn.model_selection import GridSearchCV

from sklearn.metrics import confusion_matrix

from sklearn.metrics import classification_report

from sklearn.metrics import roc_curve

from sklearn.metrics import roc_auc_score

from sklearn.preprocessing import StandardScaler

Get the Data

I begin by reading in the training and testing data, which will be used to train the models and assess the performance, respectively. Sometimes the test set is referred to as the validation data. This is accomplished with Pandas, since the data are stored in CSV files.

The "class" field represents the category being predicted:

- "wet": example of a wetland

- "not": example of not a wetland

All of the predictor variables represent different measures that quantify the conditions of the local terrain surface.

- "cost": distance from water bodies weighted by topographic slope

- "crv_arc": topographic curvature

- "crv_plane": plan curvature

- "crv_pro": profile curvature

- "ctmi": compound topographic moisture index (CTMI)

- "diss_5": terrain dissection (5 m radius)

- "diss_10": terrain dissection (10 m radius)

- "diss_20": terrain dissection (20 m radius)

- "diss_25": terrain dissection (25 m radius)

- "diss_a": terrain dissection average

- "rough_5": terrain roughness (5 m radius)

- "rough_10": terrain roughness (10 m radius)

- "rough_20": terrain roughness (20 m radius)

- "rough_25": terrain roughness (25 m radius)

- "rough_a": terrain roughness average

- "slp_d": topographic slope in degrees

- "sp_5": slope position (5 m radius)

- "sp_10": slope position (10 m radius)

- "sp_20": slope position (20 m radius)

- "sp_25" slope position (25 m radius)

- "sp_a": slope position average

It is common for examples to be provided as a single table. This will require the user to randomly split the data into training and testing sets. This can be accomplished using model_selection.train_test_split(). This function also allows for stratified random sampling based on a categorical or grouping variable.

train = pd.read_csv('D:/mydata/ml/training.csv')

test = pd.read_csv('D:/mydata/ml/validation.csv')

print(train.head())

class cost ctmi crv_arc crv_plane crv_pro diss_10 \

0 not 404.444000 9.72181 -0.376308 -0.270172 0.106136 0.596801

1 not 19.365499 7.81020 -0.501769 -0.095858 0.405911 0.127337

2 not 907.374023 5.83340 1.889750 0.630867 -1.258890 0.882726

3 not 407.984985 6.05410 0.668900 0.152399 -0.516501 0.490594

4 not 360.717987 6.85992 -0.878002 -0.237463 0.640539 0.421594

diss_20 diss_25 diss_5 ... rough_25 rough_5 rough_a slp_d \

0 0.423932 0.390846 0.559673 ... 3.54012 1.430060 2.59963 4.11556

1 0.070422 0.067852 0.150943 ... 3.12002 0.944582 2.02356 2.78634

2 0.776313 0.746689 0.974049 ... 2.67275 1.538140 2.21755 5.02015

3 0.504769 0.486941 0.592066 ... 3.29430 1.741610 2.53107 8.01975

4 0.338215 0.330672 0.464568 ... 3.46882 1.948190 2.76768 8.94219

sp_10 sp_20 sp_25 sp_5 sp_a dem_m

0 0.823059 0.356201 -0.31958 -0.115417 0.186066 544.406982

1 -2.120790 -6.384640 -9.49066 -0.599976 -4.649020 579.661987

2 4.615170 6.065980 5.46094 2.332760 4.618710 676.323975

3 0.584595 -2.148440 -3.32928 1.069950 -0.955795 620.539002

4 -3.208800 -9.338870 -10.89050 -0.280945 -5.929780 585.283997

[5 rows x 23 columns]

print(test.head())

class cost ctmi crv_arc crv_plane crv_pro diss_10 \

0 wet 0.000000 19.30950 -0.041820 0.0000 0.041820 0.070967

1 wet 0.000000 9.93015 -0.961718 0.0000 0.961718 0.091834

2 wet 0.656767 11.06300 -0.041820 0.0000 0.041820 0.580664

3 wet 0.171550 12.10270 0.000000 0.0000 0.000000 0.288487

4 wet 0.000000 9.09360 -0.209177 -0.0295 0.179677 0.159990

diss_20 diss_25 diss_5 ... rough_25 rough_5 rough_a slp_d \

0 0.049314 0.042373 0.069436 ... 2.75714 1.060430 1.932930 0.114367

1 0.054215 0.044775 0.072723 ... 2.20201 1.240590 1.796470 0.836766

2 0.128384 0.117649 0.500000 ... 1.22992 0.318957 0.740689 0.053913

3 0.084509 0.047502 0.000000 ... 1.48042 0.397072 0.863066 0.038122

4 0.049411 0.048077 0.199988 ... 1.99719 0.556684 1.194620 1.158880

sp_10 sp_20 sp_25 sp_5 sp_a dem_m

0 -2.442140 -6.259220 -8.46277 -0.819946 -4.496020 526.085022

1 -2.483700 -4.499270 -5.17249 -1.204160 -3.339900 627.888000

2 0.079712 -0.511047 -1.09052 -0.005432 -0.381821 628.497986

3 -0.202637 -0.728271 -1.38171 -0.112488 -0.606277 628.497986

4 -0.319031 -1.730900 -2.81525 -0.224976 -1.272540 628.903992

[5 rows x 23 columns]

Next, I separate out the dependent variable ("class") from the predictor variables to create a "train_y" object. To separate out the predictor variables, I drop the "class" column. I also drop 'dem_m", which represents the elevation and will not be used as a predictor variable. This process is then repeated for the test data.

train_y = train['class']

train_x = train.drop(columns=['class', 'dem_m'])

test_y = test['class']

test_x = test.drop(columns=['class', 'dem_m'])

print(train_y.head())

print(train_x.head())

0 not

1 not

2 not

3 not

4 not

Name: class, dtype: object

cost ctmi crv_arc crv_plane crv_pro diss_10 diss_20 \

0 404.444000 9.72181 -0.376308 -0.270172 0.106136 0.596801 0.423932

1 19.365499 7.81020 -0.501769 -0.095858 0.405911 0.127337 0.070422

2 907.374023 5.83340 1.889750 0.630867 -1.258890 0.882726 0.776313

3 407.984985 6.05410 0.668900 0.152399 -0.516501 0.490594 0.504769

4 360.717987 6.85992 -0.878002 -0.237463 0.640539 0.421594 0.338215

diss_25 diss_5 diss_a ... rough_20 rough_25 rough_5 rough_a \

0 0.390846 0.559673 0.492813 ... 3.21397 3.54012 1.430060 2.59963

1 0.067852 0.150943 0.104138 ... 2.61464 3.12002 0.944582 2.02356

2 0.746689 0.974049 0.844944 ... 2.59621 2.67275 1.538140 2.21755

3 0.486941 0.592066 0.518593 ... 2.88848 3.29430 1.741610 2.53107

4 0.330672 0.464568 0.388762 ... 3.20197 3.46882 1.948190 2.76768

slp_d sp_10 sp_20 sp_25 sp_5 sp_a

0 4.11556 0.823059 0.356201 -0.31958 -0.115417 0.186066

1 2.78634 -2.120790 -6.384640 -9.49066 -0.599976 -4.649020

2 5.02015 4.615170 6.065980 5.46094 2.332760 4.618710

3 8.01975 0.584595 -2.148440 -3.32928 1.069950 -0.955795

4 8.94219 -3.208800 -9.338870 -10.89050 -0.280945 -5.929780

[5 rows x 21 columns]

Machine learning algorithms commonly have user-defined parameters that are not learned by the model and must be set by the user. These are known as hyperparameters. Default options are provided, but optimizing these settings may improve model performance.

How do you know what values to use? In order to select parameters, it is necessary to test a variety of combinations. In this example, I am using a grid search and k-fold cross validation to assess the model performance using all possible combinations of a set of provided values for different hyperparameters.

This requires me to:

- Create a dictionary with the parameter names and the values to test, provided as a list within the dictionary.

- Initiate a model, RF in this case.

- Define the grid search parameters; here I am specifically using 5-fold cross validation.

If you decide to execute this code, note that it may take some time as a large number of models have to be generated and assessed.

param_grid = {

'bootstrap': [True],

'max_depth': [80, 90, 100, 110],

'max_features': [2, 3],

'min_samples_leaf': [3, 4, 5],

'min_samples_split': [8, 10, 12],

'n_estimators': [100, 200, 300, 1000]

}

rf = RandomForestClassifier()

grid_search = GridSearchCV(estimator = rf, param_grid = param_grid,

cv = 5, n_jobs = -1, verbose = 2)

Now, I perform the grid search. A total of 1,440 models are fitted and evaluated using cross validation.

# Fit the grid search to the data

grid_search.fit(train_x, train_y)

Fitting 5 folds for each of 288 candidates, totalling 1440 fits

GridSearchCV(cv=5, estimator=RandomForestClassifier(), n_jobs=-1,

param_grid={'bootstrap': [True], 'max_depth': [80, 90, 100, 110],

'max_features': [2, 3], 'min_samples_leaf': [3, 4, 5],

'min_samples_split': [8, 10, 12],

'n_estimators': [100, 200, 300, 1000]},

verbose=2)

Once the grid search is complete, I obtain the list of selected, optimal parameters from the parameters that were tested. I then make a copy of the model that used the selected parameters and apply it to predict the test data. When using the model to make a prediction, you only have to provide the predictor variables, "test_x" in this case.

grid_search.best_params_

{'bootstrap': True,

'max_depth': 80,

'max_features': 2,

'min_samples_leaf': 3,

'min_samples_split': 10,

'n_estimators': 100}

rf_mod = grid_search.best_estimator_

print(rf_mod)

rfc_pred = rf_mod.predict(test_x)

RandomForestClassifier(max_depth=80, max_features=2, min_samples_leaf=3,

min_samples_split=10)

Once predications are made to the new, withheld test data, assessment metrics can be generated by comparing the predictions to the actual or correct classifications.

First, I generate a confusion matrix. Of the 2,000 points, only 77 were misclassified, suggesting strong model performance. Next, I generate a variety of assessment metrics using the classification_report() function. Calculated metrics include precision, recall, and the F1 score.

If you run this code, you may get a slightly different answer. This is because there is some randomness in the model. To ensure reproducibility, you can set a random seed.

print(confusion_matrix(test_y,rfc_pred))

[[941 59]

[ 18 982]]

print(classification_report(test_y, rfc_pred))

precision recall f1-score support

not 0.98 0.94 0.96 1000

wet 0.94 0.98 0.96 1000

accuracy 0.96 2000

macro avg 0.96 0.96 0.96 2000

weighted avg 0.96 0.96 0.96 2000

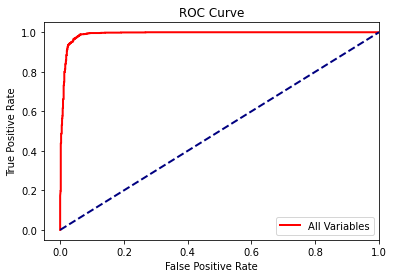

In order to assess the model using ROC and AUC, I will need to obtain the predicted class probabilities as opposed to the "hard" or binary classification. This can be accomplished using predict_proba(). This will result in a prediction for both classes ("not" and "wet") that sum to 1. Larger probabilities for the "wet" class indicate that the algorithm predicted that that site was likely to be a wetland based on its local topographic characteristics.

rfc_prob = rf_mod.predict_proba(test_x)

An ROC curve is easiest to calculate when the positive class is coded as 1 and the absent or negative class is coded as 0. So, I create a new version of "test_y" where "wet" has been coded to 1 and "not" has been coded to 0. This is accomplished using list comprehension.

In the last line of code, I calculate the AUC score using the probabilities from the second column (index 1). This is because we are interested in the probabilities for the positive class as opposed to the negative class.

A large AUC of 0.99 is obtained, suggesting strong performance.

test_y2 = test_y.to_numpy()

my_dict = {"not":0, "wet":1}

test_y3 = np.asarray([my_dict[zi] for zi in test_y2])

roc_auc_score(test_y3, rfc_prob[:,1])

0.9902489999999999

Next, I calculate the ROC curve and plot the result using matplotlib. The roc_curve() function produces multiple outputs, which can be accessed separately using tuple unpacking.

fpr, tpr, _ = roc_curve(test_y3, rfc_prob[:, 1])

plt.plot(fpr,tpr, color ='red', lw=2, label="All Variables")

plt.plot([0, 1], [0, 1], color='navy', lw=2, linestyle='--')

plt.xlim([-.05, 1.0])

plt.ylim([-.05, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('ROC Curve')

plt.legend(loc="lower right")

plt.show()

Compare Feature Spaces

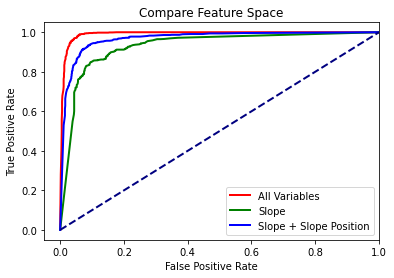

Now that we have worked through the entire process, I will provide some examples of comparing different models and different algorithms. In this example specifically, we will assess three different models that use different sets of local terrain features:

- All terrain variables

- Just topographic slope

- Topographic slope + Slope position

First, I create the data subsets. When creating the data for just slope, I have to explicitly define the output as a DataFrame. Since only one column is present, the default is to output a Series.

train_y = train['class']

train_x = train.drop(columns=['class', 'dem_m'])

test_y = test['class']

test_x = test.drop(columns=['class', 'dem_m'])

train_x_slp = pd.DataFrame(train['slp_d'])

test_x_slp = pd.DataFrame(test['slp_d'])

train_x_slp_sp = train[['slp_d', 'sp_a']]

test_x_slp_sp = test[['slp_d', 'sp_a']]

Next, I fit the three different models. I am not optimizing the hyperparameters for the sake of time. However, for a more robust experiment, each model should be optimized separately.

rf1 = RandomForestClassifier(n_estimators=200)

rf_model1 = rf1.fit(train_x, train_y)

rf2 = RandomForestClassifier(n_estimators=200)

rf_model2 = rf2.fit(train_x_slp, train_y)

rf3 = RandomForestClassifier(n_estimators=200)

rf_model3 = rf3.fit(train_x_slp_sp, train_y)

Once the models are fitted, I then use them to predict the test data and assess using the classification metrics. The model with all the terrain parameters performs best, followed by slope + slope position. The slope model was the poorest; however, precision, recall, and the F1 score are all still above 0.85, suggesting that topographic slope is an important predictor of wetland occurrence. However, the inclusion of additional predictor variables does improve performance; even if only slope position is added, the overall accuracy increases by 6%.

pred_model1= rf_model1.predict(test_x)

pred_model2 = rf_model2.predict(test_x_slp)

pred_model3 = rf_model3.predict(test_x_slp_sp)

print(classification_report(test_y, pred_model1))

print(classification_report(test_y, pred_model2))

print(classification_report(test_y, pred_model3))

precision recall f1-score support

not 0.98 0.95 0.96 1000

wet 0.95 0.98 0.96 1000

accuracy 0.96 2000

macro avg 0.96 0.96 0.96 2000

weighted avg 0.96 0.96 0.96 2000

precision recall f1-score support

not 0.87 0.86 0.86 1000

wet 0.86 0.87 0.86 1000

accuracy 0.86 2000

macro avg 0.86 0.86 0.86 2000

weighted avg 0.86 0.86 0.86 2000

precision recall f1-score support

not 0.92 0.93 0.92 1000

wet 0.93 0.92 0.92 1000

accuracy 0.92 2000

macro avg 0.92 0.92 0.92 2000

weighted avg 0.92 0.92 0.92 2000

In order to further explore and compare the results, I calculate the AUC measures and the ROC curves. Using matplotlib, I plot all the curves in the same graph space for comparison.

Again, these metrics suggest that using all variables provides the strongest performance followed by slope + slope position.

test_y2 = test_y.to_numpy()

my_dict = {"not":0, "wet":1}

test_y3 = np.asarray([my_dict[zi] for zi in test_y2])

rfc_prob1 = rf_model1.predict_proba(test_x)

rfc_prob2 = rf_model2.predict_proba(test_x_slp)

rfc_prob3 = rf_model3.predict_proba(test_x_slp_sp)

print("All Variables: " + str(roc_auc_score(test_y3, rfc_prob1[:,1])))

print("Just Slope: " + str(roc_auc_score(test_y3, rfc_prob2[:,1])))

print("Slope + Slope Position: " + str(roc_auc_score(test_y3, rfc_prob3[:,1])))

All Variables: 0.990852

Just Slope: 0.9291254999999999

Slope + Slope Position: 0.967622

print(rfc_prob1)

[[0. 1. ]

[0.06 0.94]

[0. 1. ]

...

[1. 0. ]

[0.95 0.05]

[1. 0. ]]

fpr1, tpr1, _ = roc_curve(test_y3, rfc_prob1[:, 1])

fpr2, tpr2, _ = roc_curve(test_y3, rfc_prob2[:, 1])

fpr3, tpr3, _ = roc_curve(test_y3, rfc_prob3[:, 1])

plt.plot(fpr1,tpr1, color ='red', lw=2, label="All Variables")

plt.plot(fpr2,tpr2, color ='green', lw=2, label="Slope")

plt.plot(fpr3,tpr3, color ='blue', lw=2, label="Slope + Slope Position")

plt.plot([0, 1], [0, 1], color='navy', lw=2, linestyle='--')

plt.xlim([-.05, 1.0])

plt.ylim([-.05, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Compare Feature Space')

plt.legend(loc="lower right")

plt.show()

Compare Algorithms

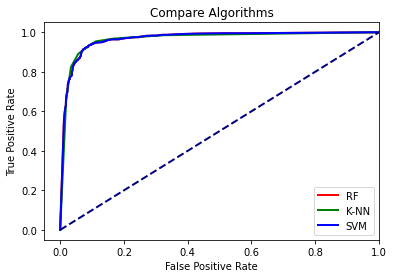

We will now compare three different machine learning algorithms: RF, k-NN, and SVM. Specifically, we will only use the topographic slope and slope position predictor variables.

In this first set of code I am preparing the training and testng data. RF is not generally sensitive or impacted by the predictor variable scale, which is also true for other tree-based models, such as single decision trees and boosted decision trees. However, SVM, a kernel-based method, and k-NN are impacted. So, it is necessary to rescale the data prior to using them in the prediction.

This is accomplished using StandardScaler(). This is fit to the training data, and the results are used to rescale both the testing and training data. This is so that the model is not biased by seeing a representation of the testing data prior to training and so that the data sets share a common transformation.

All subsequent modeling is conducted on the rescaled data.

train_x_sub = train[['slp_d', 'sp_a']]

test_x_sub = test[['slp_d', 'sp_a']]

scaler = StandardScaler()

scale_fit = scaler.fit(train_x_sub)

train_x2 = pd.DataFrame(scale_fit.transform(train_x_sub))

train_x2.columns = ['slp_d', 'sp_a']

test_x2 = pd.DataFrame(scale_fit.transform(test_x_sub))

test_x2.columns = ['slp_d', 'sp_a']

print(train_x_sub.head())

print(train_x2.head())

slp_d sp_a

0 4.11556 0.186066

1 2.78634 -4.649020

2 5.02015 4.618710

3 8.01975 -0.955795

4 8.94219 -5.929780

slp_d sp_a

0 -0.520301 0.237190

1 -0.667147 -0.580154

2 -0.420365 0.986504

3 -0.088983 0.044165

4 0.012924 -0.796660

Once the data are prepared, I next initiate the three models then train them using the rescaled training data. Again, the models should be optimized for a more rigorous experiment. However, I am not doing so here to save time.

rf_comp = RandomForestClassifier(n_estimators=200)

knn_comp = KNeighborsClassifier(n_neighbors=7)

svm_comp = SVC()

rf_compM = rf_comp.fit(train_x2, train_y)

knn_compM = knn_comp.fit(train_x2, train_y)

svm_compM = rf_comp.fit(train_x2, train_y)

I then predict to the rescaled test data using all three models and compare them using classification metrics (accuracy, precision, recall, and the F1 score) and ROC curves.

The results suggest that all three models have similar performance for this specific problem.

pred_rf_comp= rf_compM.predict(test_x2)

pred_knn_comp = knn_compM.predict(test_x2)

pred_svm_comp = svm_compM.predict(test_x2)

print(classification_report(test_y, pred_rf_comp))

print(classification_report(test_y, pred_knn_comp))

print(classification_report(test_y, pred_svm_comp))

precision recall f1-score support

not 0.92 0.92 0.92 1000

wet 0.92 0.92 0.92 1000

accuracy 0.92 2000

macro avg 0.92 0.92 0.92 2000

weighted avg 0.92 0.92 0.92 2000

precision recall f1-score support

not 0.93 0.91 0.92 1000

wet 0.91 0.93 0.92 1000

accuracy 0.92 2000

macro avg 0.92 0.92 0.92 2000

weighted avg 0.92 0.92 0.92 2000

precision recall f1-score support

not 0.92 0.92 0.92 1000

wet 0.92 0.92 0.92 1000

accuracy 0.92 2000

macro avg 0.92 0.92 0.92 2000

weighted avg 0.92 0.92 0.92 2000

test_y2 = test_y.to_numpy()

my_dict = {"not":0, "wet":1}

test_y3 = np.asarray([my_dict[zi] for zi in test_y2])

rf_comp_prob = rf_compM.predict_proba(test_x2)

knn_comp_prob = knn_compM.predict_proba(test_x2)

svm_comp_prob = svm_compM.predict_proba(test_x2)

print("RF: " + str(roc_auc_score(test_y3, rf_comp_prob[:,1])))

print("K-NN: " + str(roc_auc_score(test_y3, knn_comp_prob[:,1])))

print("SVM: " + str(roc_auc_score(test_y3, svm_comp_prob[:,1])))

RF: 0.9673204999999999

K-NN: 0.9650399999999999

SVM: 0.9673204999999999

fpr1, tpr1, _ = roc_curve(test_y3, rf_comp_prob[:, 1])

fpr2, tpr2, _ = roc_curve(test_y3, knn_comp_prob[:, 1])

fpr3, tpr3, _ = roc_curve(test_y3, svm_comp_prob[:, 1])

plt.plot(fpr1,tpr1, color ='red', lw=2, label="RF")

plt.plot(fpr2,tpr2, color ='green', lw=2, label="K-NN")

plt.plot(fpr3,tpr3, color ='blue', lw=2, label="SVM")

plt.plot([0, 1], [0, 1], color='navy', lw=2, linestyle='--')

plt.xlim([-.05, 1.0])

plt.ylim([-.05, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Compare Algorithms')

plt.legend(loc="lower right")

plt.show()

Concluding Remarks

My goal here was to provide a practical introduction to using scikit-learn for machine learning-based predictive modeling. You should now have a general understanding of how to prepare data, optimize algorithms, train models, and assess model performance.